Convolutional Neural Networks (CNNs) have transformed computer vision and related fields through their ability to learn useful features directly from input data. Among the many kinds of convolutional layers, the seemingly modest 1×1 convolution has drawn particular attention for its versatility and its effect on model size and performance.

Diving Deeper into Convolution:

Before looking at 1×1 convolutions, it helps to recall how convolutional layers work. A convolutional layer slides a set of learnable filters across the input. At each spatial position, each filter computes a weighted sum over a small local neighborhood that spans all input channels, and the same weights are reused at every position. Stacking such layers lets the network build hierarchical features, from simple patterns such as edges in early layers to more abstract structures in deeper ones. These layers form the backbone of CNNs and underpin tasks such as image recognition, object detection, and semantic segmentation.
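
To make the sliding-filter description concrete, here is a minimal NumPy sketch of a naive multi-channel 2D convolution. It assumes stride 1, no padding, and the cross-correlation form that most deep-learning frameworks actually compute; the function name `conv2d` and the array layout `(channels, height, width)` are illustrative choices, not any particular library's API.

```python
import numpy as np

def conv2d(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Naive multi-channel 2D convolution (cross-correlation), stride 1, no padding.

    x: input of shape (C_in, H, W)
    w: filters of shape (C_out, C_in, k, k)
    b: biases of shape (C_out,)
    returns: output of shape (C_out, H - k + 1, W - k + 1)
    """
    c_out, c_in, k, _ = w.shape
    _, h, wd = x.shape
    out = np.zeros((c_out, h - k + 1, wd - k + 1))
    for i in range(h - k + 1):
        for j in range(wd - k + 1):
            # Local k x k window spanning all input channels.
            patch = x[:, i:i + k, j:j + k]
            # Every filter takes a weighted sum over the same window; the
            # same weights are reused (shared) at every spatial position.
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))       # e.g. an 8x8 RGB patch
w = rng.standard_normal((16, 3, 3, 3))   # 16 filters of size 3x3
y = conv2d(x, w, np.zeros(16))
print(y.shape)  # (16, 6, 6)
```

Real frameworks use far faster implementations, but they compute the same quantity: each output value depends only on a k×k neighborhood of the input.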

Unpacking the 1×1 Convolution:

At first glance, a 1×1 convolutional layer looks almost trivial: its kernel covers a single pixel. However, a single pixel does not mean a single value. Whereas a 3×3 or 5×5 convolution mixes information from a spatial neighborhood, a 1×1 convolution looks at one spatial position at a time but combines all of the input channels at that position. In effect, it applies the same small fully connected layer to the channel vector of every pixel, which gives it properties and applications quite different from those of larger kernels.
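
The "fully connected layer per pixel" view can be checked directly. The minimal NumPy sketch below assumes a `(C, H, W)` layout and stores the 1×1 kernel as a `(C_out, C_in)` matrix; `conv1x1` is a hypothetical helper name used only for illustration.

```python
import numpy as np

def conv1x1(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """1x1 convolution on a (C_in, H, W) feature map.

    w has shape (C_out, C_in): the kernel has no spatial extent, so each
    output pixel is a linear combination of the C_in values at the same
    (h, w) location, i.e. a matrix multiply applied independently per pixel.
    """
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]

rng = np.random.default_rng(0)
x = rng.standard_normal((64, 5, 7))   # 64 channels on a 5x7 spatial grid
w = rng.standard_normal((16, 64))     # project 64 -> 16 channels
b = np.zeros(16)
y = conv1x1(x, w, b)
print(y.shape)  # (16, 5, 7): spatial size unchanged, depth reduced

# The output at one pixel depends only on that pixel's channel vector.
assert np.allclose(y[:, 2, 3], w @ x[:, 2, 3] + b)

# Equivalently: flatten the spatial grid, do one matmul, reshape back.
assert np.allclose(y, (w @ x.reshape(64, -1)).reshape(16, 5, 7) + b[:, None, None])
```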

Mechanics:

Despite its compact size, the 1×1 convolutional layer possesses several key attributes that contribute to its effectiveness:

- Dimensionality Transformation: The most common use of 1×1 convolutions is to change the depth (number of channels) of a feature map while leaving its height and width untouched. Using fewer output channels than input channels reduces the dimensionality, for example before an expensive 3×3 or 5×5 convolution; using more output channels expands it again. This makes it cheap to control the width of a network at any point in the architecture.

- Parameter Efficiency: A k×k convolution from C_in to C_out channels has k² × C_in × C_out weights, so a 1×1 convolution needs 9 times fewer weights than a 3×3 convolution with the same channel counts. Fewer parameters mean less computation and memory, and can also reduce the risk of overfitting, although generalization still depends on the rest of the architecture and the training setup.

- Network Compression: Because they shrink the number of channels cheaply, 1×1 convolutions are a standard tool for compressing networks. Placing them strategically, for instance to reduce channels before costly layers, can cut computation substantially with little or no loss in accuracy, which is particularly valuable in resource-constrained settings such as mobile and embedded devices.

- Channel-wise Transformation: Beyond changing the channel count, a 1×1 convolution mixes channels: each output channel is a learned linear combination of all input channels at the same pixel. This lets the network recombine the responses of filters from earlier layers into new features without altering spatial structure.

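- Non-linear Transformations: On its own, a 1×1 convolution is a linear map of each pixel's channel vector; this follows from convolution being a linear operation, not from the small receptive field. Non-linearity comes from the activation function applied afterwards, such as ReLU (Rectified Linear Unit). Stacking 1×1 convolutions with activations in between therefore acts like a small multilayer perceptron applied at every pixel, enabling the model to learn complex cross-channel relationships. Without the activations, consecutive 1×1 convolutions would collapse into a single equivalent 1×1 convolution.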
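
The parameter-count formula from the list above is easy to check with a few lines of arithmetic. This is a minimal sketch; `conv_params` is a hypothetical helper, and it assumes a standard convolution with one bias per output channel (no batch norm or other extras).

```python
def conv_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Learnable parameters in a standard k x k convolution layer:
    one k x k x c_in filter per output channel, plus one bias each."""
    weights = k * k * c_in * c_out
    return weights + (c_out if bias else 0)

# Same channel counts (256 -> 256), different kernel sizes.
w3 = conv_params(3, 256, 256, bias=False)   # 589,824 weights
w1 = conv_params(1, 256, 256, bias=False)   # 65,536 weights
print(w3, w1, w3 // w1)  # 589824 65536 9

# With biases included the totals become 590,080 and 65,792.
print(conv_params(3, 256, 256), conv_params(1, 256, 256))
```

The ratio is exactly k², so the saving grows quickly with kernel size (25× for 5×5).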
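
The point about linearity can be demonstrated numerically. In the minimal NumPy sketch below (helper names are illustrative, biases omitted for brevity), two stacked 1×1 convolutions without an activation equal a single 1×1 convolution whose weight matrix is the product of the two; inserting a ReLU breaks that equivalence.

```python
import numpy as np

def conv1x1(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """1x1 convolution (no bias) on a (C, H, W) map: per-pixel matmul."""
    return np.einsum("oc,chw->ohw", w, x)

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

rng = np.random.default_rng(0)
x = rng.standard_normal((32, 4, 4))
w1 = rng.standard_normal((8, 32))   # 32 -> 8 channels
w2 = rng.standard_normal((16, 8))   # 8 -> 16 channels

# Without an activation, two stacked 1x1 convs equal ONE 1x1 conv
# whose weight matrix is the product w2 @ w1.
linear_stack = conv1x1(conv1x1(x, w1), w2)
assert np.allclose(linear_stack, conv1x1(x, w2 @ w1))

# With ReLU in between, the composition is no longer a single linear map.
nonlinear_stack = conv1x1(relu(conv1x1(x, w1)), w2)
print(np.allclose(nonlinear_stack, conv1x1(x, w2 @ w1)))  # False
```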

Exploring Applications:

The usefulness of 1×1 convolutions extends well beyond changing channel counts. They appear as key building blocks in many widely used architectures:

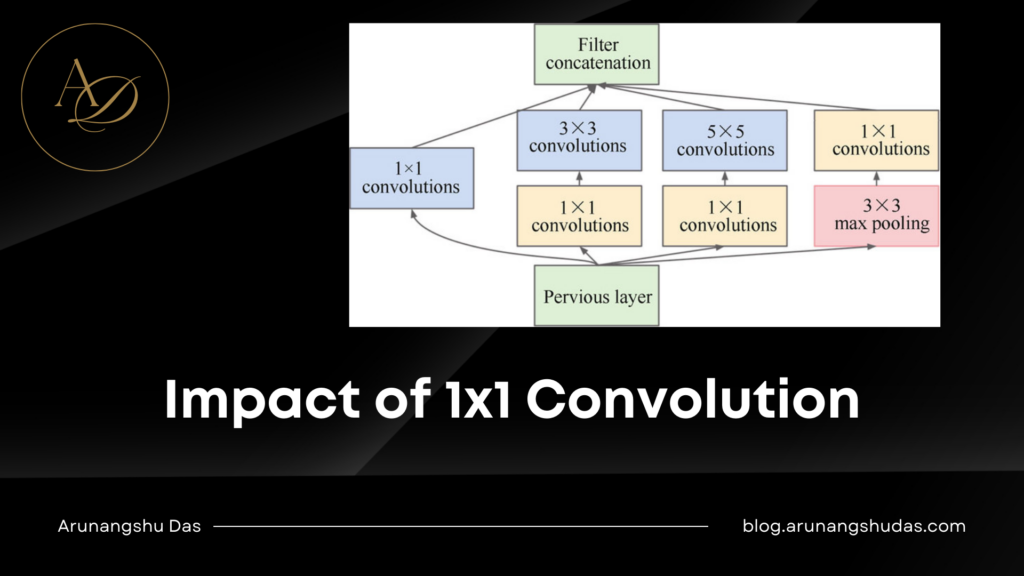

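- Bottleneck Architectures: Deeper ResNet variants (ResNet-50 and beyond) use a bottleneck block made of 1×1, 3×3, and 1×1 convolutions: the first 1×1 reduces the number of channels (for example from 256 to 64), the 3×3 operates on this thinner representation, and the final 1×1 restores the original width. MobileNet pairs a 3×3 depthwise convolution with a 1×1 pointwise convolution that mixes channels, and MobileNetV2 surrounds the depthwise step with 1×1 expansion and projection layers. In both families, the 1×1 layers keep computation low while allowing greater depth, which helped these models achieve strong accuracy for their cost in image classification and other tasks.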
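
A rough weight count shows why these designs are cheap. The sketch below counts only convolution weights (biases and batch normalization ignored) and uses the 256/64 channel example from the bottleneck description; the "two plain 3×3 convolutions at 256 channels" comparison is a hypothetical baseline chosen for illustration, not a block from either paper.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out

def depthwise_params(k: int, c: int) -> int:
    """Weights in a k x k depthwise convolution: one k x k filter per channel."""
    return k * k * c

# ResNet-style bottleneck on a 256-channel input:
# 1x1 reduce 256 -> 64, 3x3 at 64 channels, 1x1 restore 64 -> 256.
bottleneck = conv_params(1, 256, 64) + conv_params(3, 64, 64) + conv_params(1, 64, 256)

# Hypothetical baseline: two plain 3x3 convs kept at 256 channels.
plain = 2 * conv_params(3, 256, 256)

# MobileNet-style depthwise separable conv, 256 -> 256 channels:
# 3x3 depthwise, then a 1x1 pointwise conv that mixes channels.
separable = depthwise_params(3, 256) + conv_params(1, 256, 256)
standard = conv_params(3, 256, 256)

print(bottleneck, plain)    # 69632 1179648
print(separable, standard)  # 67840 589824
```

In both cases most of the remaining weights sit in the 1×1 layers, which is why their channel counts are the main knob for trading accuracy against cost.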

- Feature Fusion and Attention Mechanisms: 1×1 convolutions are a convenient way to fuse features and to build attention modules. When feature maps from different branches or layers are concatenated, a 1×1 convolution can merge them into a chosen number of channels. Attention modules commonly use 1×1 convolutions to project feature maps into query, key, and value embeddings, or to compute per-channel or per-pixel weights that rescale features according to their importance. Such mechanisms have contributed to improved performance in tasks such as image captioning, machine translation, and visual question answering.
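
As one example of attention built from 1×1 convolutions, here is a minimal NumPy sketch of channel gating in the spirit of Squeeze-and-Excitation. That design is usually described with fully connected layers; applied to a globally pooled 1×1 map, those layers are equivalent to 1×1 convolutions. The reduction ratio `r`, the weight scaling, and all function names are illustrative assumptions.

```python
import numpy as np

def conv1x1(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """1x1 convolution on a (C, H, W) map: per-pixel matmul over channels."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

def channel_gate(x, w_down, b_down, w_up, b_up):
    """Channel attention built from 1x1 convolutions.

    1. Squeeze: global average pool to a (C, 1, 1) descriptor.
    2. Excite: 1x1 conv C -> C/r, ReLU, 1x1 conv C/r -> C, sigmoid.
    3. Rescale each input channel by its gate in (0, 1).
    """
    s = x.mean(axis=(1, 2), keepdims=True)             # (C, 1, 1)
    z = np.maximum(conv1x1(s, w_down, b_down), 0.0)    # (C/r, 1, 1)
    g = sigmoid(conv1x1(z, w_up, b_up))                # (C, 1, 1)
    return x * g, g.ravel()

rng = np.random.default_rng(0)
c, r = 32, 4                          # r: reduction ratio (illustrative)
x = rng.standard_normal((c, 8, 8))
w_down, b_down = 0.1 * rng.standard_normal((c // r, c)), np.zeros(c // r)
w_up, b_up = 0.1 * rng.standard_normal((c, c // r)), np.zeros(c)

y, gates = channel_gate(x, w_down, b_down, w_up, b_up)
print(y.shape)  # (32, 8, 8)
```

In a trained network the gate weights are learned, so channels that matter more for the task receive gates closer to 1.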

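- Semantic Segmentation and Object Detection: In dense prediction tasks, 1×1 convolutions refine feature maps and turn them into per-pixel outputs. Because they act across channels rather than across space, they do not increase spatial resolution themselves; instead they complement the layers that do. U-Net ends with a 1×1 convolution that maps each pixel's 64-dimensional feature vector to class scores. Mask R-CNN, in its common Feature Pyramid Network (FPN) configuration, uses 1×1 convolutions in the FPN's lateral connections, which match channel counts so that feature maps of different resolutions can be merged, and as the output layer of its mask head, which predicts one mask per class. These components help improve localization accuracy and produce precise object masks in complex scenes.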
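
Both uses can be sketched in a few lines of NumPy. The channel counts, the three-class setup, and the helper name below are illustrative assumptions (FPN itself uses a wider pyramid), but the operations follow the descriptions above: a per-pixel 1×1 classifier, and a 1×1 lateral projection added to a 2× nearest-neighbour upsampled coarser map.

```python
import numpy as np

def conv1x1(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """1x1 convolution on a (C, H, W) map: per-pixel matmul over channels."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]

rng = np.random.default_rng(0)

# 1) Per-pixel classifier head, as at the end of U-Net: map each
#    64-dimensional feature vector to scores for n_classes.
n_classes = 3
features = rng.standard_normal((64, 16, 16))
w_cls, b_cls = rng.standard_normal((n_classes, 64)), np.zeros(n_classes)
logits = conv1x1(features, w_cls, b_cls)   # (3, 16, 16)
label_map = logits.argmax(axis=0)          # (16, 16): one class per pixel

# 2) FPN-style lateral connection: a 1x1 conv brings a fine, 128-channel
#    backbone map to the pyramid width (64 here, for illustration) so it
#    can be added to the 2x nearest-neighbour upsampled coarser level.
fine = rng.standard_normal((128, 16, 16))
coarse = rng.standard_normal((64, 8, 8))
w_lat, b_lat = rng.standard_normal((64, 128)), np.zeros(64)
upsampled = coarse.repeat(2, axis=1).repeat(2, axis=2)   # (64, 16, 16)
merged = conv1x1(fine, w_lat, b_lat) + upsampled          # (64, 16, 16)

print(logits.shape, label_map.shape, merged.shape)
```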

Despite their modest size, 1×1 convolutions have become one of the most widely used building blocks in deep learning. They change channel depth cheaply, mix information across channels, gain non-linearity when followed by an activation, and underpin bottleneck designs, compressed models, attention modules, and dense prediction heads. As neural network design continues to evolve, they are likely to remain a standard tool for practitioners across many domains.